https://www.oreilly.com/learning/how-to-build-a-robot-that-sees-with-100-and-tensorflow

Object recognition is one of the most exciting areas in machine learning right now. Computers have been able to recognize objects like faces or cats reliably for quite a while, but recognizing arbitrary objects within a larger image has been the Holy Grail of artificial intelligence. Maybe the real surprise is that human brains recognize objects so well. We effortlessly convert photons bouncing off objects at slightly different frequencies into a spectacularly rich set of information about the world around us. Machine learning still struggles with these simple tasks, but in the past few years, it’s gotten much better.

Deep learning and a large public training data set called ImageNet have driven an impressive amount of progress in object recognition. TensorFlow is a well-known framework that makes it very easy to implement deep learning algorithms on a variety of architectures. TensorFlow is especially good at taking advantage of GPUs, which in turn are very good at running deep learning algorithms.

Building my robot

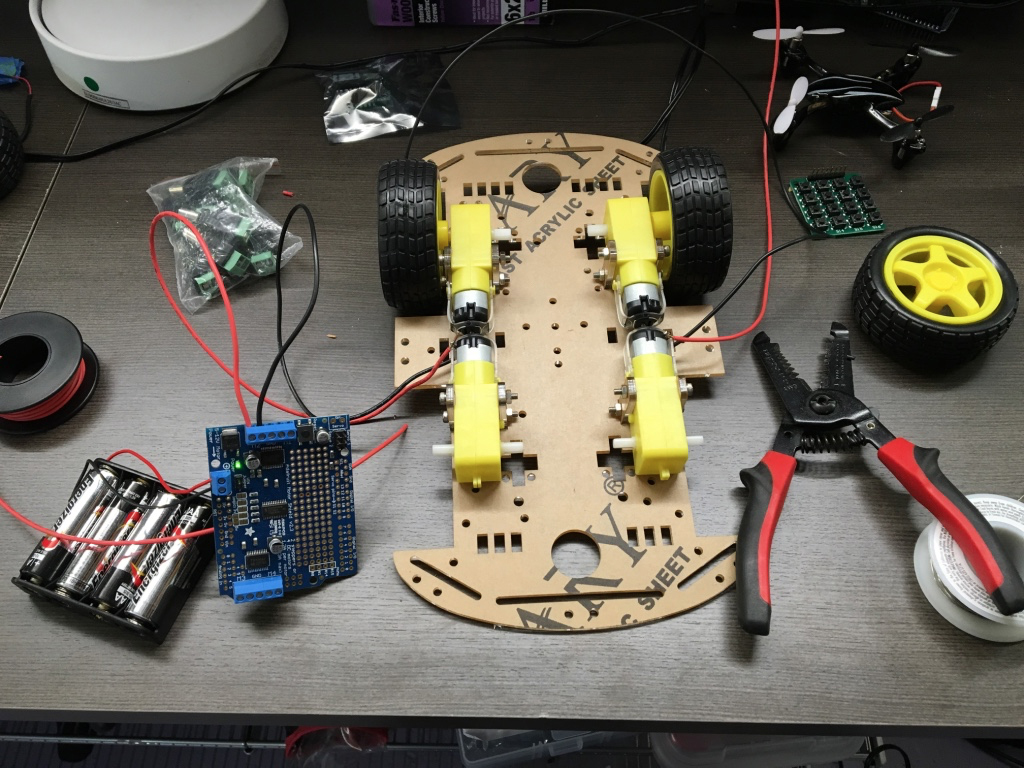

I wanted to build a robot that could recognize objects. Years of experience building computer programs and doing test-driven development have turned me into a menace working on physical projects. In the real world, testing your buggy device can burn down your house, or at least fry your motor and force you to wait a couple of days for replacement parts to arrive.

The Raspberry Pi needs about 2 amps of current, but 3 amps is safer with the speaker we’re going to plug into it. iPhone battery chargers work awesomely for this task. Small chargers don’t actually output enough amps and can cause problems, but the Lumsing power bank works great and costs $18.

A couple of HC-SR04 sonar sensors help the robot avoid crashing into things—you can buy five for $11.
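The HC-SR04 reports distance as the width of an echo pulse, so obstacle avoidance comes down to a small conversion. Here is a minimal sketch in Python, assuming the echo time has already been measured on a GPIO pin (that part is omitted). The function names and the 20 cm threshold are illustrative assumptions, not the code running on the robots.

```python
# Approximate speed of sound in air at room temperature, in cm per second.
SPEED_OF_SOUND_CM_PER_S = 34300.0


def echo_to_distance_cm(echo_seconds):
    """Convert a round-trip echo time (seconds) to a one-way distance (cm)."""
    if echo_seconds < 0:
        raise ValueError("echo time cannot be negative")
    # The pulse travels to the obstacle and back, so halve the path length.
    return echo_seconds * SPEED_OF_SOUND_CM_PER_S / 2.0


def too_close(echo_seconds, threshold_cm=20.0):
    """Return True if the obstacle is nearer than threshold_cm (assumed value)."""
    return echo_to_distance_cm(echo_seconds) < threshold_cm
```

A 1 ms echo works out to roughly 17 cm, which this sketch would flag as too close with the assumed threshold.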

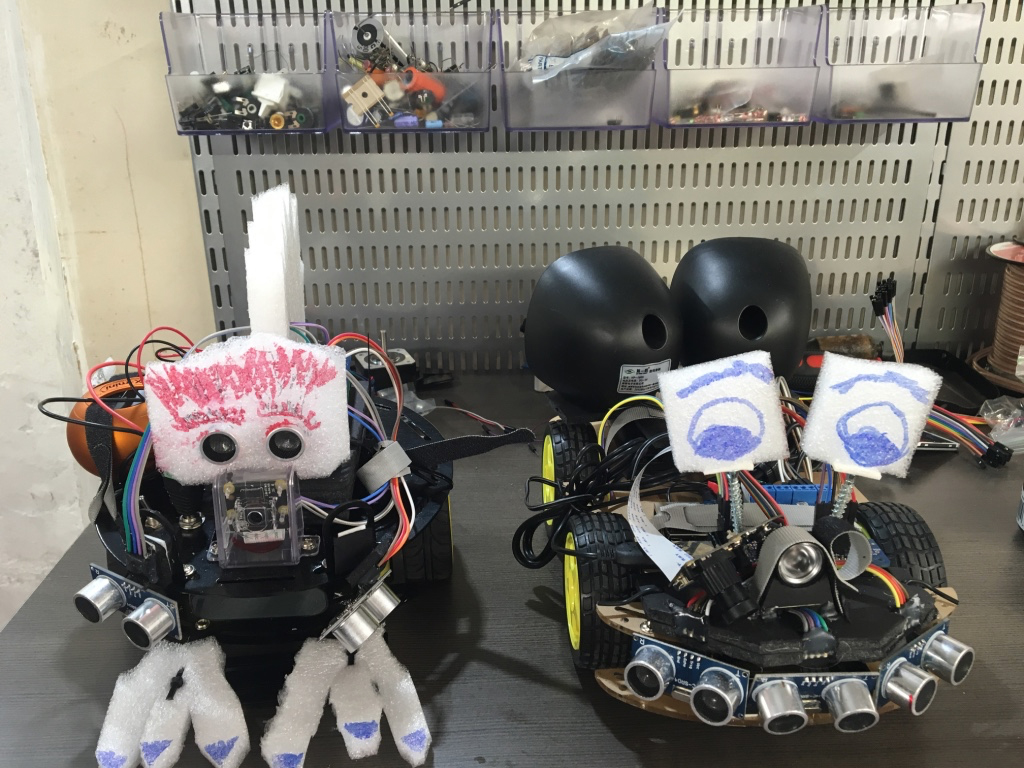

I added the cheapest USB speakers I could find, and used a bunch of zip ties, hot glue, and foam board to keep everything together. As an added bonus, I cut up some of the packaging materials the electronics came with and drew on them to give the robots some personality. I should note here that I actually built two robots (see Figure 5) because I was experimenting with different chassis, cameras, sonar placement, software, and so forth, and ended up buying enough parts for two versions.

For streaming the camera, the RPi Cam Web interface works great. It’s super configurable and by default puts the latest image from the camera in a RAM disk at /dev/shm/mjpeg/cam.jpg.

If you want to stream the camera data to a webpage (very useful for debugging), you can install Nginx, an extremely fast open source webserver/proxy. I configured Nginx to pass requests for the camera image directly to the file location and everything else to my webserver.

    http {
        server {
            location / {
                proxy_pass http://unix:/home/pi/drive.sock;
            }
            location /cam.jpg {
                root /dev/shm/mjpeg;
            }
        }
    }
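The same RAM-disk file can also be read straight from Python, which is handy when you want to hand a frame to other code instead of a browser. The sketch below is an assumption about how one might do that, not part of the RPi Cam Web interface: the function names are hypothetical, and the start/end marker check is only a rough guard against picking up a frame that is still being written.

```python
from pathlib import Path

# Standard JPEG start-of-image and end-of-image markers.
JPEG_START = b"\xff\xd8"
JPEG_END = b"\xff\xd9"


def is_complete_jpeg(data):
    """Rough check that the bytes look like a fully written JPEG."""
    return data.startswith(JPEG_START) and data.endswith(JPEG_END)


def read_latest_frame(path="/dev/shm/mjpeg/cam.jpg"):
    """Return the latest camera frame as bytes, or None if it is unavailable."""
    try:
        data = Path(path).read_bytes()
    except OSError:
        # File missing or unreadable, e.g. the camera is not running yet.
        return None
    return data if is_complete_jpeg(data) else None
```

Returning None instead of raising lets a polling loop simply skip a bad frame and try again.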

I then built a simple Python webserver to spin the wheels of the robot based on keyboard commands, which made for a nifty remote control car.

As a side note, it’s fun to play with the sonar and the driving system to build a car that can maneuver around obstacles.
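To give a feel for what such a remote control server involves, here is a minimal sketch using only Python's standard library. It is not the actual server from this project: the key bindings, the `/drive?key=w` URL shape, and the `set_motors` stub are all hypothetical, and the real motor driver call would replace the stub.

```python
from http.server import BaseHTTPRequestHandler
from urllib.parse import parse_qs, urlparse

# Hypothetical key bindings: key -> (left wheel, right wheel) speed in [-1, 1].
KEY_TO_WHEELS = {
    "w": (1.0, 1.0),    # forward
    "s": (-1.0, -1.0),  # backward
    "a": (-1.0, 1.0),   # spin left
    "d": (1.0, -1.0),   # spin right
}
STOP = (0.0, 0.0)
LAST_COMMAND = {"left": 0.0, "right": 0.0}


def wheel_speeds_for_key(key):
    """Map a key press to wheel speeds; unknown keys stop the robot."""
    return KEY_TO_WHEELS.get(key.lower(), STOP)


def key_from_path(path):
    """Extract the 'key' query parameter from a request path."""
    return parse_qs(urlparse(path).query).get("key", [""])[0]


def set_motors(left, right):
    """Hypothetical stand-in for the real motor driver; just records the command."""
    LAST_COMMAND["left"] = left
    LAST_COMMAND["right"] = right


class DriveHandler(BaseHTTPRequestHandler):
    """Handles GET requests such as /drive?key=w (assumed URL shape)."""

    def do_GET(self):
        left, right = wheel_speeds_for_key(key_from_path(self.path))
        set_motors(left, right)
        body = f"{left} {right}\n".encode()
        self.send_response(200)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)
```

Defaulting unknown keys to a stop is a deliberate choice here: a stray key press should never leave the wheels spinning.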

Programming my robot

Finally, it’s time to install TensorFlow. There are a couple of ways to do the installation, but TensorFlow actually comes with a makefile that lets you build it right on the system. The steps take a few hours and have quite a few dependencies, but they worked great for me.

TensorFlow comes with a prebuilt model called “inception” that performs object recognition. You can follow the tutorial to get it running.

Running tensorflow/contrib/pi_examples/label_image/gen/bin/label_image on an image from the camera will output the top five guesses. The model works surprisingly well on a wide range of inputs, but it’s clearly missing an accurate “prior,” or a sense of what things it’s likely to see, and there are quite a lot of objects missing from the training data. For example, it consistently recognizes my laptop, even at funny angles, but if I point it at my basket of loose wires it consistently decides that it’s looking at a toaster. If the camera is blocked and it gets a dark or blurry image it usually decides that it’s looking at nematodes, clearly an artifact of the data it was trained on.
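One way to picture what a “prior” would add is to reweight the classifier's scores by how likely each object is to show up in your own home. The sketch below is purely illustrative: the labels, scores, and prior values are made up rather than real label_image output, and the function names are hypothetical.

```python
def apply_prior(scores, prior, default_prior=0.01):
    """Multiply each score by its prior weight and renormalize to sum to 1.

    Labels absent from `prior` get `default_prior` (an assumed small value).
    """
    weighted = {
        label: score * prior.get(label, default_prior)
        for label, score in scores.items()
    }
    total = sum(weighted.values())
    if total == 0:
        # Nothing survived the reweighting; fall back to the raw scores.
        return dict(scores)
    return {label: value / total for label, value in weighted.items()}


def top_k(scores, k=5):
    """Return the k highest-scoring (label, score) pairs, best first."""
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)[:k]


# Made-up numbers for illustration only.
raw_scores = {"toaster": 0.6, "laptop": 0.3, "nematode": 0.1}
household_prior = {"laptop": 0.5, "toaster": 0.05}
reweighted = apply_prior(raw_scores, household_prior)
```

With these invented numbers, the laptop overtakes the toaster after reweighting, and the nematode guess all but disappears because nothing in the prior makes it plausible.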

Testing my robot

Here are my two homemade robots running deep learning to do object recognition.

Final thoughts

From 2003 to 2005, I worked in the Stanford Robotics lab, where the robots cost hundreds of thousands of dollars and couldn’t perform object recognition nearly as well as my robots. I’m excited to put this software on my drone and never have to look for my keys again.

I’d also like to acknowledge all the people who helped with this fun project. My neighbors, Chris Van Dyke and Shruti Gandhi, helped give the robot a friendly personality. My friend, Ed McCullough, dramatically improved the hardware design and taught me the value of hot glue and foam board. Pete Warden, who works at Google, helped get TensorFlow compiling properly on the Raspberry Pi and provided amazing customer support.
