https://kevinzakka.github.io/2016/09/26/applying-deep-learning/

Nuts and Bolts of Applying Deep Learning

There were some super interesting talks from leading experts in the field: Hugo Larochelle from Twitter, Andrej Karpathy from OpenAI, Yoshua Bengio from the Université de Montréal, and Andrew Ng from Baidu, to name a few. Of the plethora of presentations, there was one somewhat non-technical one given by Andrew that really piqued my interest.

In this blog post, I’m gonna try and give an overview of the main ideas outlined in his talk. The goal is to pause a bit and examine the ongoing trends in Deep Learning thus far, as well as gain some insight into applying DL in practice.

By the way, if you missed out on the livestreams, you can still view them at the following: Day 1 and Day 2.

Table of Contents:

- Major Deep Learning Trends

- End-to-End Deep Learning

- Bias-Variance Tradeoff

- Human-level Performance

- Personal Advice

Major Deep Learning Trends

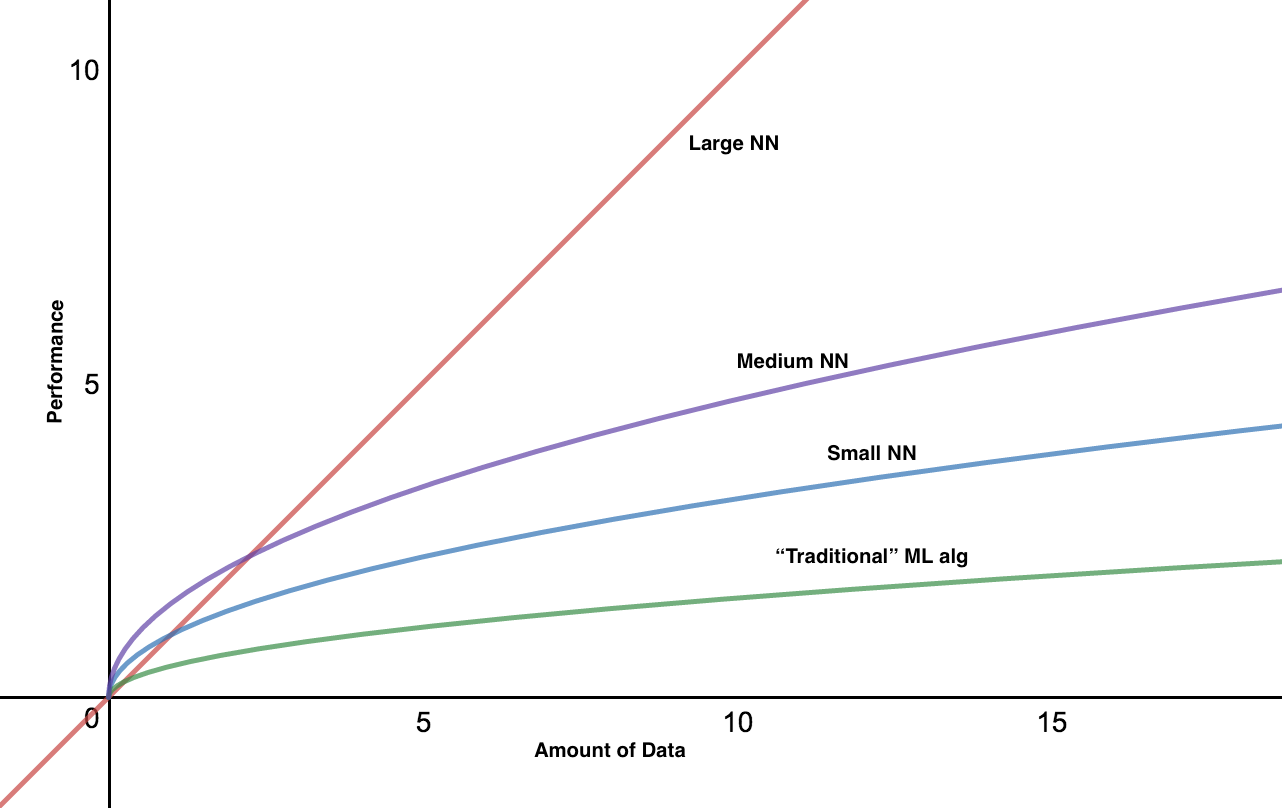

Why do DL algorithms work so well? According to Ng, with the rise of the Internet, mobile, and IoT era, the amount of data accessible to us has greatly increased. This translates directly into a boost in the performance of neural network models, especially the larger ones, which have the capacity to absorb all this data.

This trend is most prevalent in the big data realm, where hand engineering effectively gets replaced by end-to-end approaches, and where bigger neural nets combined with a lot of data tend to outperform all other models.

Machine Learning and HPC team. The rise of big data and the need for larger models has started to put pressure on companies to hire a Computer Systems team. This is because some of the HPC (high-performance computing) applications require highly specialized knowledge and it is difficult to find researchers and engineers with sufficient knowledge in both fields. Thus, cooperation from both teams is the key to boosting performance in AI companies.

Categorizing DL models. Work in DL can be categorized in the following 4 buckets:

The rise of End-to-End DL

A major improvement in the end-to-end approach has been the fact that outputs are becoming more and more complicated. For example, rather than just outputting a simple class score such as 0 or 1, algorithms are starting to generate richer outputs: images in the case of GANs, full captions with RNNs and, most recently, audio in DeepMind’s WaveNet.

So what exactly does end-to-end training mean? Essentially, it means that AI practitioners are shying away from intermediate representations and going directly from one end (raw input) to the other end (output). Here’s an example from speech recognition.

The main take-away from this section is that we should always be cautious of end-to-end approaches in applications where huge data is hard to come by.

Bias-Variance Tradeoff

Splitting your data. In most deep learning problems, train and test come from different distributions. For example, suppose you are working on implementing an AI-powered rearview mirror and have gathered 2 chunks of data: the first, larger chunk comes from many places (could be partly bought, and partly crowdsourced) and the second, much smaller chunk is actual car data.

In this case, splitting the data into train/dev/test can be tricky. One might be tempted to carve the dev set out of the training chunk like in the first example of the diagram below. (Note that the chunk on the left corresponds to data mined from the first distribution and the one on the right to the one from the second distribution.)

Hence, a smarter way of splitting the above dataset would be just like the second line of the diagram. Now in practice, Andrew recommends creating dev sets from both data distributions: a train-dev set and a test-dev set. In this manner, the gaps between the errors measured on each set can help you pin down the problem more clearly.
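To make the split a bit more concrete, here’s a minimal sketch in plain Python. The function name `split_two_distributions`, the `train_dev_frac` parameter and the 50/50 split of the small chunk are my own assumptions for illustration, not something prescribed in the talk.

```python
import random


def split_two_distributions(large_pool, target_pool, train_dev_frac=0.05, seed=0):
    """Split two data chunks that come from different distributions.

    large_pool  : the big chunk (bought / crowdsourced data)
    target_pool : the small chunk (actual car data)

    Returns (train, train_dev, test_dev, test), where train and train_dev
    come from the large chunk and test_dev and test come from the small one.
    The fractions used here are illustrative assumptions.
    """
    rng = random.Random(seed)
    large = list(large_pool)
    target = list(target_pool)
    rng.shuffle(large)
    rng.shuffle(target)

    # Hold out a small slice of the large chunk as the train-dev set.
    n_train_dev = max(1, int(len(large) * train_dev_frac))
    train_dev, train = large[:n_train_dev], large[n_train_dev:]

    # Split the target-distribution chunk in half: test-dev and test.
    half = len(target) // 2
    test_dev, test = target[:half], target[half:]

    return train, train_dev, test_dev, test
```

With 1,000 generic examples and 100 car examples, this gives 950 train, 50 train-dev, 50 test-dev and 50 test examples. The point of the design is that both the test-dev set and the test set come from the distribution you actually care about, while the train-dev set lets you measure error on unseen data from the training distribution.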

Human-level Performance

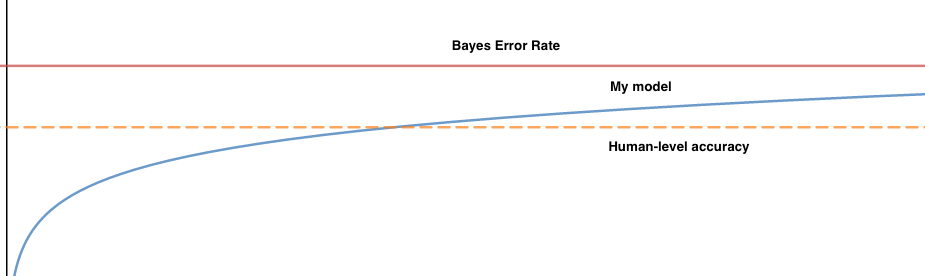

One of the very important concepts underlined in this lecture was that of human-level performance. In the basic setting, DL models tend to plateau once they have reached or surpassed human-level accuracy. While it is important to note that human-level performance doesn’t necessarily coincide with the golden Bayes error rate, it can serve as a very reliable proxy which can be leveraged to determine your next move when training your model.

Here’s an example that can help illustrate the usefulness of human-level accuracy. Suppose you are working on an image recognition task and measure the following:

- Train error: 8%

- Dev error: 10%
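Here’s a small sketch of how a human-level number could turn those two measurements into a decision. The function name `diagnose` and the two human-level error values tried below (1% and 7.5%) are hypothetical and only there for illustration. The rule itself is the usual one: compare the gap between human-level and train error (avoidable bias) with the gap between train and dev error (variance).

```python
def diagnose(human_error, train_error, dev_error):
    """Return (avoidable_bias, variance, focus), with errors given in percent.

    avoidable_bias : train error minus human-level error
    variance       : dev error minus train error
    focus          : whichever of the two gaps is larger
    """
    avoidable_bias = train_error - human_error
    variance = dev_error - train_error
    focus = "bias" if avoidable_bias > variance else "variance"
    return avoidable_bias, variance, focus


# Hypothetical human-level error of 1%: the 7-point bias gap dominates.
print(diagnose(1.0, 8.0, 10.0))   # (7.0, 2.0, 'bias')

# Hypothetical human-level error of 7.5%: the 2-point variance gap dominates.
print(diagnose(7.5, 8.0, 10.0))   # (0.5, 2.0, 'variance')
```

So the same train and dev errors can call for opposite strategies depending on where human-level performance sits.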

By the way, there’s always room for improvement. Even if you are close to human-level accuracy overall, there could be subsets of the data where you perform poorly and working on those can boost production performance greatly.

Finally, one might ask what is a good way of defining human-level accuracy. For example, in the following image diagnosis setting, ignoring the cost of obtaining data, how should one pick the criteria for human-level accuracy?

- typical human: 5%

- general doctor: 1%

- specialized doctor: 0.8%

- group of specialized doctors: 0.5%
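One way to reason about this, assuming the goal is to approximate the Bayes error rate and the humans see the same inputs as the model: the Bayes error is the lowest error anyone can achieve, so every measured human error rate is an upper bound on it, and the smallest one is the tightest proxy. Here’s a tiny sketch (the function name `best_bayes_proxy` is made up for this example):

```python
def best_bayes_proxy(human_errors):
    """Pick the candidate with the lowest error rate (in percent).

    Each human error rate is an upper bound on the Bayes error,
    so the minimum is the tightest available estimate of it.
    """
    return min(human_errors.items(), key=lambda kv: kv[1])


candidates = {
    "typical human": 5.0,
    "general doctor": 1.0,
    "specialized doctor": 0.8,
    "group of specialized doctors": 0.5,
}

print(best_bayes_proxy(candidates))  # ('group of specialized doctors', 0.5)
```

This only settles the question when the cost of obtaining labels is ignored, as stated above. In a real project a cheaper, slightly worse reference might be the practical choice.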

Personal Advice

Andrew ended the presentation with 2 ways one can improve his/her skills in the field of deep learning.

- Practice, Practice, Practice: compete in Kaggle competitions and read associated blog posts and forum discussions.

- Do the Dirty Work: read a lot of papers and try to replicate the results. Soon enough, you’ll get your own ideas and build your own models.
