http://www.computervisionblog.com/2016/12/nuts-and-bolts-of-building-deep.html

Nuts and Bolts of Building Deep Learning Applications: Ng @ NIPS2016

Figure 1. Andrew Ng delivers a powerful message at NIPS 2016.

Andrew Ng and the Lecture

Andrew Ng's lecture at NIPS 2016 in Barcelona was phenomenal -- truly

one of the best presentations I have seen in a long time. In a

juxtaposition of two influential presentation styles, the CEO-style and the Professor-style,

Andrew Ng mesmerized the audience for two hours. Andrew Ng's wisdom

from managing large scale AI projects at Baidu, Google, and Stanford

really shows. In his talk, Ng spoke to the audience and discussed one of

they key challenges facing most of the NIPS audience -- how do you make your deep learning systems better?

Rather than showing off new research findings from his cutting-edge

projects, Andrew Ng presented a simple recipe for analyzing and

debugging today's large scale systems. With no need for equations, a

handful of diagrams, and several checklists, Andrew Ng delivered a

two-whiteboards-in-front-of-a-video-camera lecture, something you would

expect at a group research meeting. However, Ng made sure to not delve

into Research-y areas, likely to make your brain fire on all cylinders,

but making you and your company very little dollars in the foreseeable

future.

Money-making deep learning vs Idea-generating deep learning

Andrew Ng highlighted the fact that while NIPS is a research conference,

many of the newly generated ideas are simply ideas, not yet

battle-tested vehicles for converting mathematical acumen into dollars.

The bread and butter of money-making deep learning is supervised

learning with recurrent neural networks such as LSTMs in second place.

Research areas such as Generative Adversarial Networks (GANs), Deep

Reinforcement Learning (Deep RL), and just about anything branding

itself as unsupervised learning, are simply Research, with a capital R.

These ideas are likely to influence the next 10 years of Deep Learning

research, so it is wise to focus on publishing and tinkering if you

really love such open-ended Research endeavours. Applied deep learning

research is much more about taming your problem (understanding the

inputs and outputs), casting the problem as a supervised learning

problem, and hammering it with ample data and ample experiments.

"It takes surprisingly long time to grok bias and variance deeply, but people that understand bias and variance deeply are often able to drive very rapid progress."

--Andrew Ng

The 5-step method of building better systems

Most issues in applied deep learning come from a training-data /

testing-data mismatch. In some scenarios this issue just doesn't come

up, but you'd be surprised how often applied machine learning projects

use training data (which is easy to collect and annotate) that is

different from the target application. Andrew Ng's discussion is

centered around the basic idea of bias-variance tradeoff. You want a

classifier with a good ability to fit the data (low bias is good) that

also generalizes to unseen examples (low variance is good). Too often,

applied machine learning projects running as scale forget this critical

dichotomy. Here are the four numbers you should always report:

- Training set error

- Testing set error

- Dev (aka Validation) set error

- Train-Dev (aka Train-Val) set error

Andrew Ng suggests following the following recipe:

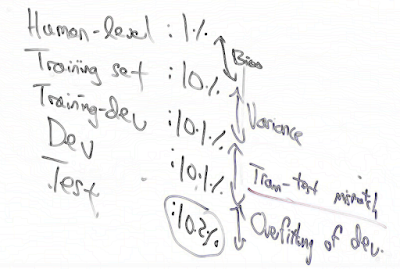

Figure 2. Andrew Ng's "Applied Bias-Variance for Deep Learning Flowchart"

for building better deep learning systems.

Take all of your data, split it into 60% for training and 40% for

testing. Use half of the test set for evaluation purposes only, and the

other half for development (aka validation). Now take the training set,

leave out a little chunk, and call it the training-dev data. This 4-way

split isn't always necessary, but consider the worse case where you

start with two separate sets of data, and not just one: a large set of

training data and a smaller set of test data. You'll still want to split

the testing into validation and testing, but also consider leaving out a

small chunk of the training data for the training-validation. By

reporting the data on the training set vs the training-validation set,

you measure the "variance."

Figure 3. Human-level vs Training vs Training-dev vs Dev vs Test.

Taken from Andrew Ng's 2016 talk.

In addition to these four accuracies, you might want to report the

human-level accuracy, for a total of 5 quantities to report. The

difference between human-level and training set performance is the Bias.

The difference between the training set and the training-dev set is the

Variance. The difference between the training-dev and dev sets is the

train-test mismatch, which is much more common in real-world

applications that you'd think. And finally, the difference between the

dev and test sets measures how overfitting.

Nowhere in Andrew Ng's presentation does he mention how to use unsupervised learning, but he does include a brief discussion about "Synthesis." Such synthesis ideas are all about blending pre-existing data or using a rendering engine to augment your training set.

Conclusion

If you want to lose weight, gain muscle, and improve your overall physical appearance, there is no magical protein shake and no magical bicep-building exercise. The fundamentals such as reduced caloric intake, getting adequate sleep, cardiovascular exercise, and core strength exercises like squats and bench presses will get you there. In this sense, fitness is just like machine learning -- there is no secret sauce. I guess that makes Andrew Ng the Arnold Schwarzenegger of Machine Learning.

What you are most likely missing in your life is the rigor of reporting a handful of useful numbers such as performance on the 4 main data splits (see Figure 3). Analyzing these numbers will let you know if you need more data or better models, and will ultimately let you hone in your expertise on the conceptual bottleneck in your system (see Figure 2).

With a prolific research track record that never ceases to amaze, we all know Andrew Ng as one hell of an applied machine learning researcher. But the new Andrew Ng is not just another data-nerd. His personality is bigger than ever -- more confident, more entertaining, and his experience with a large number of academic and industrial projects makes him much wiser. With enlightening lectures as "The Nuts and Bolts of Building Applications with Deep Learning" Andrew Ng is likely to be an individual whose future keynotes you might not want to miss.

Nowhere in Andrew Ng's presentation does he mention how to use unsupervised learning, but he does include a brief discussion about "Synthesis." Such synthesis ideas are all about blending pre-existing data or using a rendering engine to augment your training set.

Conclusion

If you want to lose weight, gain muscle, and improve your overall physical appearance, there is no magical protein shake and no magical bicep-building exercise. The fundamentals such as reduced caloric intake, getting adequate sleep, cardiovascular exercise, and core strength exercises like squats and bench presses will get you there. In this sense, fitness is just like machine learning -- there is no secret sauce. I guess that makes Andrew Ng the Arnold Schwarzenegger of Machine Learning.

What you are most likely missing in your life is the rigor of reporting a handful of useful numbers such as performance on the 4 main data splits (see Figure 3). Analyzing these numbers will let you know if you need more data or better models, and will ultimately let you hone in your expertise on the conceptual bottleneck in your system (see Figure 2).

With a prolific research track record that never ceases to amaze, we all know Andrew Ng as one hell of an applied machine learning researcher. But the new Andrew Ng is not just another data-nerd. His personality is bigger than ever -- more confident, more entertaining, and his experience with a large number of academic and industrial projects makes him much wiser. With enlightening lectures as "The Nuts and Bolts of Building Applications with Deep Learning" Andrew Ng is likely to be an individual whose future keynotes you might not want to miss.

Appendix

You can watch a September 27th, 2016 version of the Andrew Ng Nuts and Bolts of Applying Deep Learning Lecture on YouTube, which he delivered at the Deep Learning School. If you are working on machine learning problems in a startup, then definitely give the video a watch. I will update the video link once/if the newer NIPS 2016 version shows up online.

You can also check out Kevin Zakka's blog post for ample illustrations and writeup corresponding to Andrew Ng's entire talk.

Comments

Post a Comment